Blockchain performance is poor but rapidly improving

Until this point, blockchains have felt a lot like dial-up internet: slow, clunky, and with very limited use cases. Dial-up internet speeds had a maximum of 56 kb/s, which is shockingly slow by today’s standards. 56 kb/s was only enough for very basic internet browsing, like loading up your favorite web portal, sending emails, or downloading a low-res image very slowly.

In the 1990s, 56 kb/s dial-up speeds were adequate because people didn’t spend much time on the internet or need to share massive amounts of data in high-resolution images and videos. People did not realize how slow internet speeds were because dial-up was the fastest connection most of them had access to at the time, and the early use cases and applications were bottlenecked by the performance of the underlying network.

Ironically, Bitcoin propagates far less data than dial-up. Bitcoin produces a ~1 MB block every 10 minutes (600 seconds), or about 1.7 kB/s. That is roughly 13 kb/s, about a quarter of dial-up’s 56 kb/s. This is in great part due to the preferences of early blockchain designers and adopters, who opted to solve for redundancy and decentralization over performance. The preferences of users are shifting, and the preferences of early crypto adopters are no longer as applicable to the mass adopters in the middle of the adoption curve.
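The comparison can be checked with a few lines of arithmetic. This is a minimal sketch that assumes a "1 MB" block means 1,000,000 bytes and that dial-up's 56 kb/s is measured in kilobits; the function and constant names are illustrative.

```python
def throughput_bytes_per_sec(block_bytes: float, block_interval_sec: float) -> float:
    """Average data rate implied by a block size and a block interval."""
    return block_bytes / block_interval_sec

BITCOIN_BLOCK_BYTES = 1_000_000  # assumption: ~1 MB block, decimal megabyte
BITCOIN_INTERVAL_SEC = 600       # one block every 10 minutes
DIALUP_BITS_PER_SEC = 56_000     # 56 kb/s, in bits

btc_bytes_per_sec = throughput_bytes_per_sec(BITCOIN_BLOCK_BYTES, BITCOIN_INTERVAL_SEC)
btc_bits_per_sec = btc_bytes_per_sec * 8  # 8 bits per byte

print(f"Bitcoin: {btc_bytes_per_sec / 1000:.2f} kB/s = {btc_bits_per_sec / 1000:.1f} kb/s")
print(f"Dial-up moves about {DIALUP_BITS_PER_SEC / btc_bits_per_sec:.1f}x more data")
```

Under these assumptions Bitcoin averages under 2 kilobytes per second, a small fraction of what a 56 kb/s modem could carry.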

Similar to the early internet, most blockchains are slow today, but their performance will increase rapidly over time. Next-generation blockchains are able to benefit from massive performance upgrades as compute power and internet speeds ramp up.

We keep packing more and more transistors onto a single computer chip, but clock speeds are no longer increasing. Instead, we increase computational throughput by using those transistors to pack multiple processors onto the same chip. This is referred to as multicore. As a result, multicore performance roughly doubles every 2 years. According to Nielsen’s Law, high-end internet connection speeds grow by about 50% per year, which works out to doubling slightly faster than every 2 years.
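To get a feel for what a two-year doubling period means over time, here is a small sketch of the compounding. The two-year period is the article's rough figure, and the function name is illustrative.

```python
def growth_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Multiple by which a quantity grows if it doubles every doubling_period_years."""
    return 2 ** (years / doubling_period_years)

for years in (2, 10, 20):
    print(f"After {years} years: {growth_factor(years):,.0f}x")
```

At that pace, a decade yields a 32x improvement and two decades roughly 1,000x, which is why architectures that track hardware and bandwidth gains pull away so quickly from those that do not.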

Unlike legacy technologies, next-generation blockchains are able to take full advantage of these leaps in multicore performance and bandwidth. It is very difficult to make the case that blockchains like Ethereum, which do not scale with modern multicore hardware, will be able to keep up with the performance of newer blockchains that do. The often-touted L2 solution is not unique to Ethereum, and the data compression and transaction batching of L2s can be applied to a much more performant blockchain for the same percentage increase in performance.

Block space is the most valuable resource, blocks are data

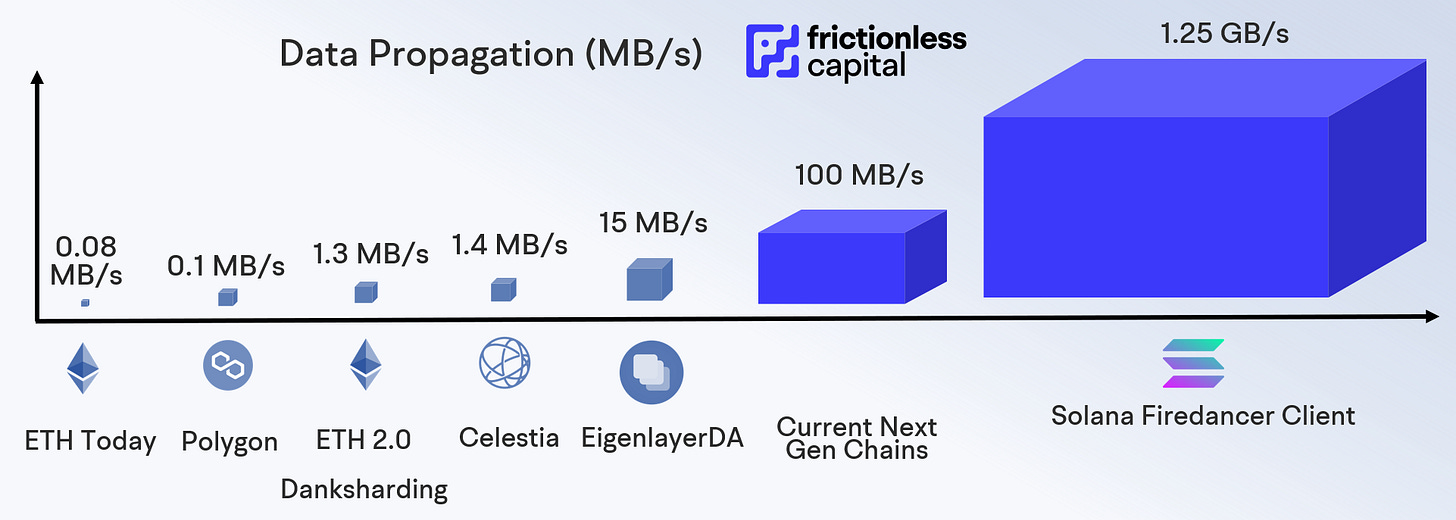

There are a number of features of highly performant blockchains, but for the purpose of this article we will focus on data propagation. Internet bandwidth will likely continue to be the gating factor of internet experience for users.

In blockchain, block space is one of the most valuable and important resources because it gives the ability to write to a block and submit it as a transaction to all nodes for approval. It’s important to note that an identical transaction on one chain will not use the same amount of data as one on another; there is no standard amount of data a transaction will use. However, if a chain propagates more data (bigger blocks, faster), it will generally be able to process more transactions per second and support a greater amount of activity on the network. High throughput (traditionally measured in transactions per second, or TPS) is a feature of performant, next-generation blockchains.

Unlike a single application, a blockchain will support hundreds or even thousands of applications in an entire ecosystem of products running on a single network. It is reasonable to expect that demand for block space will increase exponentially in the coming years as more useful and popular applications and use cases are developed. Without high throughput, access to scarce block space becomes very expensive when demand surges. We saw this phenomenon illustrated painfully during Yuga Labs’ Otherside deeds mint, when gas fees exceeded 5 ETH or ~$14,000 USD per transaction.
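The link between data propagation and transactions per second can be sketched as a simple division. The 250-byte average transaction size below is purely an illustrative assumption (as noted above, transaction sizes differ from chain to chain), and `max_tps` is a hypothetical helper name.

```python
def max_tps(bytes_per_sec: float, avg_tx_bytes: float) -> float:
    """Upper bound on transactions per second for a given data rate and average transaction size."""
    if avg_tx_bytes <= 0:
        raise ValueError("avg_tx_bytes must be positive")
    return bytes_per_sec / avg_tx_bytes

# ~1 MB block every 600 seconds, with an assumed 250-byte average transaction
bitcoin_like_rate = 1_000_000 / 600
print(f"{max_tps(bitcoin_like_rate, 250):.1f} TPS")

# The same assumed transaction size on a chain propagating 100x more data
print(f"{max_tps(bitcoin_like_rate * 100, 250):.1f} TPS")
```

For a fixed transaction size, the TPS ceiling scales linearly with the amount of data the chain propagates per second.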

If block space is data, people will demand exponentially more data as better applications are built. Let’s compare some popular blockchains to see how much data they are propagating to nodes per second. Barring some recent innovations and exceptional cases, the majority of blockchains need to propagate data to two-thirds plus one of all nodes (just over 66%) to achieve consensus. If consensus is not reached, a transaction is not finalized (completed).
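The two-thirds-plus-one threshold can be written down directly. This sketch assumes every node counts equally; many real networks weight votes by stake instead, so treat `quorum_size` as an illustration rather than any particular chain's rule.

```python
def quorum_size(total_nodes: int) -> int:
    """Smallest number of nodes that is strictly more than two-thirds of total_nodes."""
    if total_nodes < 1:
        raise ValueError("total_nodes must be positive")
    return (2 * total_nodes) // 3 + 1

for n in (4, 100, 1000):
    print(f"{n} nodes -> data must reach at least {quorum_size(n)}")
```

Every additional node raises the number of peers a block has to reach before a transaction can finalize, which is why propagation speed matters so much for throughput.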

Bitcoin is propagating a minuscule amount of data. This is consistent with the BTC ethos of hard money that can survive a nuclear winter, but it comes at a huge cost to performance. As for Ethereum, even after sharding, which is the main throughput increase planned on the ETH 2.0 roadmap for 2023 or 2024, ETH will initially be propagating a small fraction of the data that next-generation chains already propagate today. This severely impacts the scalability of Ethereum. Even if 100% of the initial 1.3 MB/s is used for ZK (zero knowledge) L2 rollups batching hundreds of transactions into one, this level of data propagation and 100,000 TPS is likely not compatible with mass adoption if tens or hundreds of thousands of applications and millions of users all attempt to write to the same block simultaneously.
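As a rough sanity check on those two figures, dividing the data rate by the transaction rate gives the data budget per transaction. The sketch assumes the 1.3 figure is in megabytes per second (1,300,000 bytes); the names are illustrative.

```python
def implied_bytes_per_tx(bytes_per_sec: float, tps: float) -> float:
    """Average on-chain data available per transaction at a given rate and TPS."""
    return bytes_per_sec / tps

DATA_RATE_BYTES_PER_SEC = 1_300_000  # assumption: 1.3 MB/s, decimal megabytes
TARGET_TPS = 100_000

budget = implied_bytes_per_tx(DATA_RATE_BYTES_PER_SEC, TARGET_TPS)
print(f"{budget:.0f} bytes of block data per transaction")
```

A budget this small is only reachable with aggressive rollup compression, and it leaves little headroom if demand grows beyond that level.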

Legacy blockchains that do not scale with modern hardware and increasing bandwidth will struggle to compete with next generation blockchains that do

Poor data propagation limits what application and product engineers can build, making mass adoption very difficult. Engineers are forced to work around the limitations of the blockchain they are building on by minimizing interactions with the chain to lower costs for users. Product and application engineers should be able to innovate freely on blockchains without having to worry about the cost and speed of the network they are building on. Next-generation blockchains enable this.

Simply put, until we move past legacy architectures like Ethereum and Bitcoin to more performant chains, it will be very difficult to build applications with global reach & scale that are useful to consumers, severely limiting mass adoption of crypto.

Not all is lost. Today, these performance constraints are beginning to be removed, and we are being ushered into a new generation of blockchains. We will see new levels of data propagation and synchronicity, the effects of composability, and unbounded application development onboarding billions of users.

The world is not static. The only constant is change, and blockchains need to be continually adapted to scale with modern hardware and increasing internet speeds. The only limits on innovation and design should be the limits of physics. Light can travel around the Earth in just 133 milliseconds. Until block times are as close to 133 ms as possible, with the highest amount of data propagation available, blockchain tech can be pushed further. This race has only just begun…
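The 133 ms figure follows from dividing the Earth's circumference by the speed of light. The sketch uses the equatorial circumference and the speed of light in a vacuum, so it is a theoretical floor; signals in real networks travel more slowly.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458     # exact, by definition of the metre
EARTH_CIRCUMFERENCE_M = 40_075_017       # equatorial circumference, approximate

def light_travel_time_ms(distance_m: float) -> float:
    """Time in milliseconds for light in a vacuum to cover distance_m."""
    return distance_m / SPEED_OF_LIGHT_M_PER_S * 1000

around_the_world_ms = light_travel_time_ms(EARTH_CIRCUMFERENCE_M)
print(f"{around_the_world_ms:.1f} ms")
```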

Legal Disclaimer:

This article does not constitute investment advice, is not intended to be relied upon as the basis for an investment decision, and is not, and should not be assumed to be, complete. The contents are provided for informational purposes only and are not to be construed as advice. Any prospective investor should conduct their own research and could lose all or a substantial portion of their investment.